Introduction

Containers allow applications, code, and their dependencies to be packaged into a lightweight image. The image can be deployed to different servers and environments, and the application runs with the same results every time. Containers also offer a great deal of flexibility when selecting the infrastructure, environment, or service used to host your containerised application.

Amazon Elastic Container Service (ECS)

Amazon Elastic Container Service (ECS), the AWS container orchestration service, simplifies running Docker containers on AWS by managing container provisioning and deployment. Amazon ECS provides a highly available, highly scalable container solution that manages containers across one or more hosts. A key feature of the service is load balancing across multiple containers: ECS dynamically registers containers with the target group behind a specific load balancer and listener, so that traffic keeps flowing through container cycling, deployments, and scaling events.
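
During a rolling deployment, the service's `deploymentConfiguration` settings `minimumHealthyPercent` and `maximumPercent` bound how many tasks may run (and be registered with the target group) at once. ECS rounds the lower bound up and the upper bound down. The sketch below reproduces only that arithmetic. The `deployment_bounds` helper is hypothetical, and its defaults of 100 and 200 assume a replica service using the rolling update deployment type.

```python
from typing import Tuple


def deployment_bounds(desired_count: int,
                      minimum_healthy_percent: int = 100,
                      maximum_percent: int = 200) -> Tuple[int, int]:
    """Return (min_healthy_tasks, max_running_tasks) for a rolling update.

    Hypothetical helper: the lower bound is rounded up and the upper bound
    rounded down. Integer arithmetic avoids float rounding surprises.
    """
    if desired_count < 0:
        raise ValueError("desired_count must be non-negative")
    # Ceiling division: the fewest healthy tasks ECS must keep in service.
    min_healthy = -(-desired_count * minimum_healthy_percent // 100)
    # Floor division: the most tasks allowed in the RUNNING state at once.
    max_running = desired_count * maximum_percent // 100
    return min_healthy, max_running


print(deployment_bounds(4))          # default rolling update
print(deployment_bounds(4, 50, 100))  # replace in place, half at a time
```

With the defaults and four desired tasks, ECS may start four replacement tasks and register them before draining the old ones. With 50/100, it must instead stop two old tasks before it can start their replacements.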

Container settings and configuration are predominantly managed by Task Definitions and Services. Services orchestrate the containers: they replace unhealthy containers, control how the containers scale, and hold the network configuration, such as the VPC, subnets, and load balancer settings.
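
As a rough illustration of what a Service holds, here is a minimal sketch that assembles a request as a Python dictionary. Its keys follow the ECS CreateService API, which is the shape accepted by boto3's `create_service`. The helper name, cluster name, subnet and security group IDs, target group ARN, and container name are hypothetical placeholders. The code only builds the dictionary and makes no AWS calls.

```python
from typing import Any, Dict, List


def build_service_request(cluster: str, service_name: str, task_definition: str,
                          desired_count: int, subnets: List[str],
                          security_groups: List[str], target_group_arn: str,
                          container_name: str, container_port: int) -> Dict[str, Any]:
    """Hypothetical helper: CreateService parameters for a Fargate service."""
    return {
        "cluster": cluster,
        "serviceName": service_name,
        "taskDefinition": task_definition,
        # The service replaces unhealthy tasks to keep this many running.
        "desiredCount": desired_count,
        "launchType": "FARGATE",
        # Network configuration: which VPC subnets and security groups tasks use.
        "networkConfiguration": {
            "awsvpcConfiguration": {
                "subnets": subnets,
                "securityGroups": security_groups,
                "assignPublicIp": "DISABLED",
            }
        },
        # Load balancer settings: ECS registers each task's container port
        # with this target group.
        "loadBalancers": [
            {
                "targetGroupArn": target_group_arn,
                "containerName": container_name,
                "containerPort": container_port,
            }
        ],
    }


request = build_service_request(
    cluster="demo-cluster",                      # hypothetical
    service_name="demo-service",                 # hypothetical
    task_definition="demo-app",                  # hypothetical family name
    desired_count=2,
    subnets=["subnet-aaaa1111", "subnet-bbbb2222"],  # hypothetical IDs
    security_groups=["sg-cccc3333"],                 # hypothetical ID
    target_group_arn="arn:aws:elasticloadbalancing:eu-west-2:123456789012:"
                     "targetgroup/demo-tg/0123456789abcdef",  # hypothetical
    container_name="web",
    container_port=80,
)
# With credentials configured, this could be passed to
# boto3.client("ecs").create_service(**request).
```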

Task Definitions handle the configuration of the container image and are referenced by the service. This includes the container port, mount points, logging configuration (such as exporting all logs to CloudWatch Logs), and the source the image is pulled from, either Docker Hub or Amazon Elastic Container Registry (ECR). Environment variables are also set within the Task Definition and injected into the container when it is deployed. These can be stored in plaintext or, for sensitive data, securely stored in and retrieved from AWS Secrets Manager.
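
The sketch below pulls the Task Definition settings described above into one dictionary. Its keys follow the ECS RegisterTaskDefinition API, which is the shape accepted by boto3's `register_task_definition`. The helper name, family, image, role and secret ARNs, log group, and paths are hypothetical. An ECR image URI would take the form `<account>.dkr.ecr.<region>.amazonaws.com/<repo>:<tag>`, while a Docker Hub image can be referenced by name.

```python
from typing import Any, Dict


def build_task_definition(family: str, image: str, execution_role_arn: str,
                          secret_arn: str, region: str = "eu-west-2") -> Dict[str, Any]:
    """Hypothetical helper: RegisterTaskDefinition parameters for one container."""
    return {
        "family": family,
        "requiresCompatibilities": ["FARGATE"],
        "networkMode": "awsvpc",
        "cpu": "256",
        "memory": "512",
        # Role ECS uses to pull from ECR, write logs, and read the secret.
        "executionRoleArn": execution_role_arn,
        "volumes": [{"name": "app-data"}],
        "containerDefinitions": [
            {
                "name": "web",
                "image": image,  # Docker Hub name or ECR image URI
                "essential": True,
                "portMappings": [{"containerPort": 80, "protocol": "tcp"}],
                "mountPoints": [
                    {"sourceVolume": "app-data", "containerPath": "/data",
                     "readOnly": False}
                ],
                # Ship stdout/stderr to CloudWatch Logs via the awslogs driver.
                "logConfiguration": {
                    "logDriver": "awslogs",
                    "options": {
                        "awslogs-group": f"/ecs/{family}",
                        "awslogs-region": region,
                        "awslogs-stream-prefix": family,
                    },
                },
                # Plaintext environment variable.
                "environment": [{"name": "APP_ENV", "value": "production"}],
                # Sensitive value resolved from Secrets Manager at start-up.
                "secrets": [{"name": "DB_PASSWORD", "valueFrom": secret_arn}],
            }
        ],
    }


task_def = build_task_definition(
    family="demo-app",
    image="nginx:latest",
    execution_role_arn="arn:aws:iam::123456789012:role/demoTaskExecutionRole",
    secret_arn="arn:aws:secretsmanager:eu-west-2:123456789012:secret:demo-db-AbCdEf",
)
```

Keeping secrets in the `secrets` list rather than `environment` means the Task Definition stores only the ARN. The value itself is fetched when the task starts.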

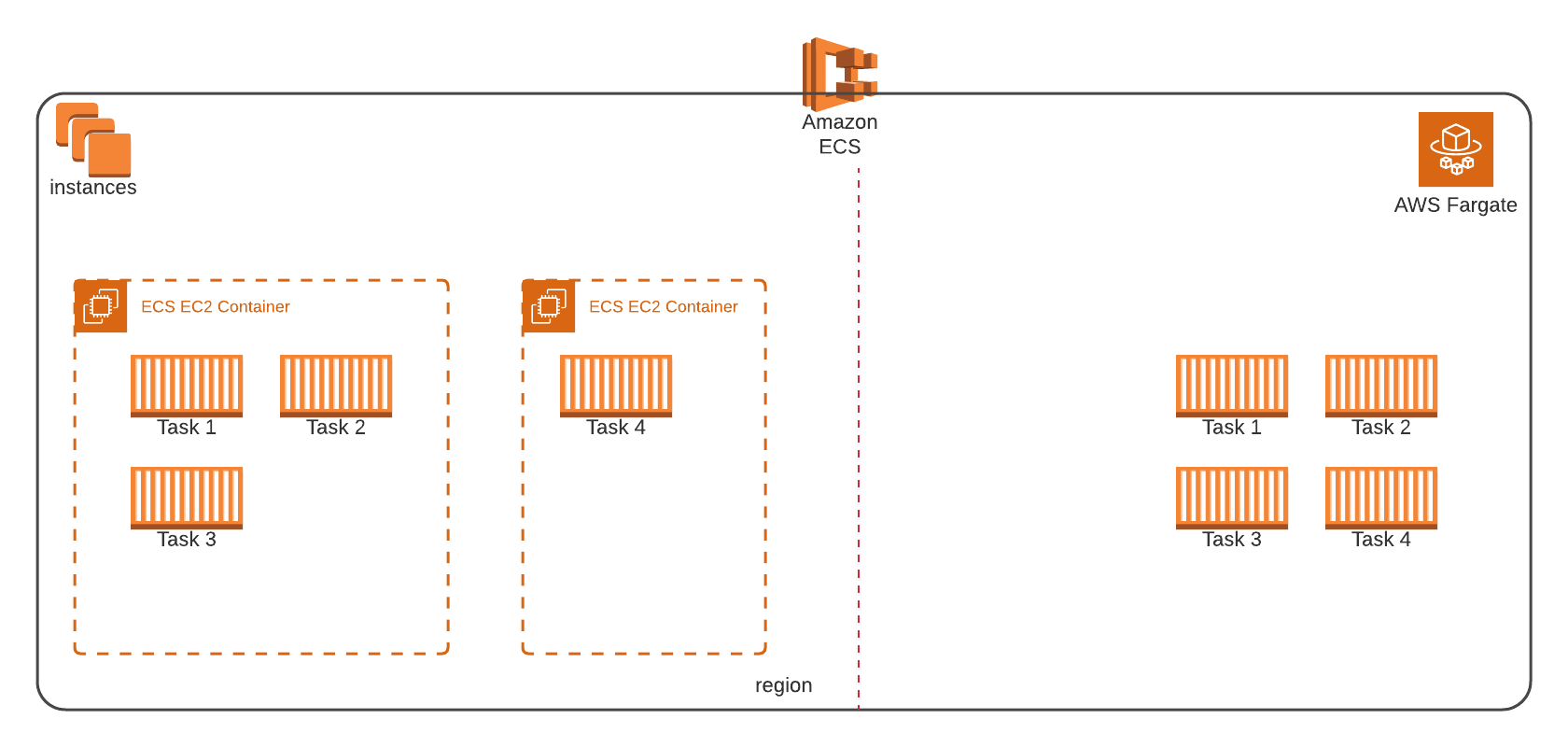

Traditionally, Amazon ECS deploys containers directly onto Amazon EC2 instances, which allows full access to the underlying infrastructure. That deeper level of access also brings the typical operational overhead of maintaining EC2 instances, such as provisioning, monitoring, scaling, and patching. Some of these tasks can be simplified with AWS services such as Patch Manager, a capability of AWS Systems Manager, which automates patching. Adopting a serverless architecture through the AWS Fargate option for Amazon ECS can reduce this operational overhead further.

Serverless Containers

Serverless is a cloud-native architecture that shifts the responsibility for operating, maintaining, and patching servers to the cloud provider. AWS fully manages key infrastructure tasks such as server provisioning, patching, upgrades, and capacity management, allowing developers to focus entirely on developing and getting the most out of their applications.

AWS Fargate removes the need to provision, manage, and scale Amazon EC2 instances. In a server-based architecture, containers (which ECS runs as tasks) are launched onto EC2 instances that have the ECS container agent installed, and an Auto Scaling group can be configured to scale the number of instances based on capacity. With Fargate, tasks are launched, scaled, and run on fully managed servers, so developers no longer manage the underlying infrastructure and only need to define the Docker image, CPU, and memory, as outlined in the diagram below.
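
Because Fargate sizes a task from its CPU and memory settings, only certain pairings are accepted. The sketch below checks a pairing against a lookup table of Linux task sizes. The table was transcribed from the AWS Fargate documentation at the time of writing and should be treated as an assumption to verify against the current docs. CPU is in ECS CPU units (1024 = 1 vCPU) and memory is in MiB. The `is_valid_fargate_size` helper is hypothetical.

```python
from typing import Dict, List


def _gib_range(start_gib: int, stop_gib: int, step_gib: int) -> List[int]:
    """Memory values in MiB from start_gib to stop_gib inclusive."""
    return [gib * 1024 for gib in range(start_gib, stop_gib + 1, step_gib)]


# Assumed Fargate Linux task sizes: CPU units -> allowed memory (MiB).
FARGATE_SIZES: Dict[int, List[int]] = {
    256: [512, 1024, 2048],        # 0.25 vCPU
    512: _gib_range(1, 4, 1),      # 0.5 vCPU: 1-4 GB
    1024: _gib_range(2, 8, 1),     # 1 vCPU: 2-8 GB
    2048: _gib_range(4, 16, 1),    # 2 vCPU: 4-16 GB
    4096: _gib_range(8, 30, 1),    # 4 vCPU: 8-30 GB
    8192: _gib_range(16, 60, 4),   # 8 vCPU: 16-60 GB in 4 GB steps
    16384: _gib_range(32, 120, 8), # 16 vCPU: 32-120 GB in 8 GB steps
}


def is_valid_fargate_size(cpu: int, memory_mib: int) -> bool:
    """Hypothetical check that a CPU/memory pair appears in the table above."""
    return memory_mib in FARGATE_SIZES.get(cpu, [])


print(is_valid_fargate_size(256, 512))
print(is_valid_fargate_size(1024, 1024))
```

A check like this can catch an invalid pairing before a Task Definition is registered, rather than waiting for the API to reject it.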

CirrusHQ has broad experience deploying, running, and supporting container workloads on AWS. If you would like to find out more about running your Docker containers on AWS, feel free to contact us via our contact page.