What is a container?

A container is a unit of software delivery: a package that bundles an application with the libraries and configuration files it needs to run out of the box on a host operating system. Docker is the best-known family of products with containerisation at its core. In the case of Docker, the host operating system needs the Docker Engine to be installed.

As the name suggests, containerisation implies portability. Containers are often pictured as shipping containers, and the imagery is deliberate: containerisation is a highly portable method of packaging and distributing software.

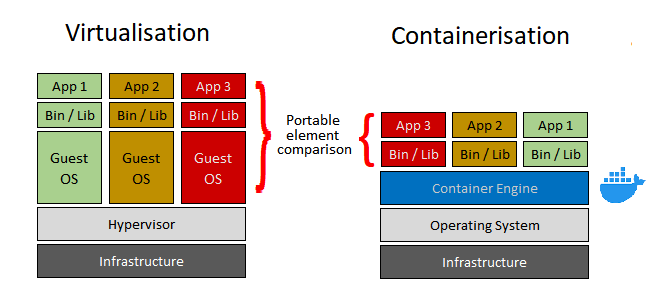

Containerisation can be seen as a more lightweight alternative to virtualisation. In a “traditional” virtualised environment, a hypervisor and a guest OS VM must be placed on the underlying infrastructure before any application-specific libraries and the applications themselves are built or imported.

A containerised environment differs in that multiple applications can sit upon a single container engine on a single host operating system, without the need for a hypervisor. The container engine isolates each application, together with the dependencies it requires, when creating a container. Because containers share the host's kernel rather than each carrying a full guest OS, a container is also smaller to transport than an entire VM image, which invariably ships with parts of the OS the application does not need.

What are containers used for?

Containers are used in scenarios where there is a need for portability, faster deployment, high scalability and inter-compatibility. For example, a Linux-based container image built by someone on a Windows machine can run on any flavour of Linux that has the Docker Engine installed, just as easily as if it were deployed on another Windows machine. Containers are also a favourable option for customers who wish to improve on traditional virtualisation options while remaining vendor agnostic and cutting down on VM licensing costs.

They are also increasingly used within cloud computing on platforms such as AWS, and they provide a reliable encapsulation method in continuous integration and continuous deployment (CI/CD) pipelines.

How do containers differ from Lambdas?

Considering Docker, the first word that springs to mind is “containerisation”. For Lambda, the key word would be “serverless”. However, both Docker and Lambda can be regarded as serverless in a loose sense, given that deploying containers across a number of distributed machines, running different operating systems, can be argued to be a form of decentralisation.

Containerisation still requires initial work to create the container before it can be deployed or distributed. Lambda code, by contrast, can be made live almost as soon as it has been written.

With Lambda, scaling up or down is not a consideration for the administrator, as Lambda’s own infrastructure is geared towards varying levels of usage and customers are charged per execution of code. These are the true benefits of serverless computing. Compared with containers, Lambda is better suited when the scope of the code is limited to the AWS services it interacts with, e.g. S3, DynamoDB or SQS.
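To make the Lambda side concrete, here is a minimal sketch of a Python function that reacts to S3 upload events. The `handler(event, context)` shape and the layout of the S3 event record follow AWS's documented conventions; the function name, the return value and what is done with each object are illustrative assumptions, not a recommended design.

```python
import urllib.parse


def handler(event, context):
    """Entry point that Lambda calls once per invocation.

    Assumes the function is triggered by S3 "object created" notifications.
    """
    uploaded = []
    for record in event.get("Records", []):
        bucket = record["s3"]["bucket"]["name"]
        # Object keys arrive URL-encoded in S3 event notifications.
        key = urllib.parse.unquote_plus(record["s3"]["object"]["key"])
        uploaded.append(f"s3://{bucket}/{key}")
    # Hypothetical result shape: a real function would do its work here,
    # for example writing a row to DynamoDB or sending a message to SQS.
    return {"processed": len(uploaded), "objects": uploaded}
```

There is no server, image or runtime to prepare here: the function body is the whole deployment, which is why the scope of a Lambda tends to stay close to the AWS service that triggers it.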

Containerisation is better suited to situations where the code relies on an existing service or tool, such as Apache, that has to be configured before the working build is containerised. Scaling Docker containers up and down, as part of orchestration, can be managed through another open source tool called Kubernetes. Kubernetes allows a high level of automation to be applied, including rules that define the conditions for automatic scaling.
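As an illustration of what "conditions for automatic scaling" can look like, the sketch below mirrors the rule documented for the Kubernetes Horizontal Pod Autoscaler: the desired replica count is the current count multiplied by the ratio of the observed metric to the target metric, rounded up. It is a simplified model only. The function and parameter names are hypothetical, and the real autoscaler also accounts for pod readiness, missing metrics and stabilisation windows.

```python
import math


def desired_replicas(current_replicas, current_metric, target_metric,
                     min_replicas=1, max_replicas=10, tolerance=0.1):
    """Simplified form of the Horizontal Pod Autoscaler scaling rule."""
    ratio = current_metric / target_metric
    # Skip scaling when the metric is already close enough to the target.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    desired = math.ceil(current_replicas * ratio)
    # Keep the result inside the configured bounds.
    return max(min_replicas, min(max_replicas, desired))
```

For example, four containers averaging 90% CPU against a 60% target would be scaled to six, while the same four averaging 30% would be scaled down to two.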

Many vendors publish ready-made Docker images of open source and commercial software, in much the same way as Amazon Machine Images (AMIs) are available for use in Amazon Elastic Compute Cloud (EC2).

How can containers accelerate delivery?

Containers are especially suited to microservices. Because their footprint is smaller than that of conventional images, it is easier to justify dedicating a container to a narrowly defined function rather than dedicating an entire OS VM or image. It also means that more containers can be deployed, and deployed faster, than conventional OS images when scaling up resources to meet demand.

AWS ECS (Elastic Container Service) is the AWS solution for combining serverless principles with containerisation flexibility. It is to containers what EC2 is to OS images.

For managing clusters through Kubernetes, AWS offers EKS (Elastic Kubernetes Service), which removes the need to install and maintain your own Kubernetes control plane.

Embracing microservices through containerisation in a development environment can bring numerous benefits. Because microservices promote greater use of APIs between the component parts of a deployment, a single container among many can be modified rather than a significant chunk of the entire codebase. With all components talking to each other through APIs, a newcomer can work on one element without needing to know the history of the code or the entire codebase, only what goes in and what comes out. Containers can also be changed in a platform- and language-agnostic way, allowing developers to choose the tools they prefer.
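The idea of knowing only what goes in and what comes out can be sketched with a deliberately tiny service. Everything in this example is hypothetical (the word-count task, the JSON field names, the port); it uses only the Python standard library and is meant to show the shape of a single-purpose service that could live in its own container, not a production setup.

```python
import json
from http.server import BaseHTTPRequestHandler, HTTPServer


def count_words(payload):
    """The whole contract: {"text": str} goes in, {"words": int} comes out."""
    text = payload["text"]
    if not isinstance(text, str):
        raise TypeError("text must be a string")
    return {"words": len(text.split())}


class WordCountHandler(BaseHTTPRequestHandler):
    """Exposes count_words over HTTP POST with JSON request and response."""

    def do_POST(self):
        length = int(self.headers.get("Content-Length", 0))
        try:
            result, status = count_words(json.loads(self.rfile.read(length))), 200
        except (KeyError, TypeError, ValueError):
            result, status = {"error": 'expected JSON like {"text": "..."}'}, 400
        data = json.dumps(result).encode("utf-8")
        self.send_response(status)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(data)))
        self.end_headers()
        self.wfile.write(data)


def serve(port=8080):
    """Run the service until interrupted (the port is an arbitrary choice)."""
    HTTPServer(("0.0.0.0", port), WordCountHandler).serve_forever()
```

Calling `serve()` starts the service. A caller written in any language only needs to honour the JSON contract, so the internals of `count_words` can be rewritten, or the whole service replaced, without the other containers noticing.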

Microservices can also be managed much more easily within a CI/CD pipeline, with smaller items able to move from left to right far more quickly than, say, a sprawling piece of legacy code that pulls in numerous third-party libraries.

In summary, containers offer:

- Great flexibility

- Faster scaling

- Smaller data footprints

- A vendor-agnostic platform

- Potentially leaner development cycles

If you would like CirrusHQ to advise you on how containerisation can help you improve efficiency and productivity, feel free to drop us a line at info@cirrushq.com or via our contact page.